Videos

This is an endpoint AI Gesture Recognition application, developed with machine learning technology, that runs on the NuMicro® M55M1 microcontroller.

This is an endpoint AI Facial Landmark Detection application, developed with machine learning technology, that runs on the NuMicro® M55M1 microcontroller.

This is an endpoint AI Pose Landmark Detection application, developed with machine learning technology, that runs on the NuMicro® M55M1 microcontroller.

Development Board Introduction

This is a medicine classification application built with the NuMicro® M55M1 and the NuEdgeWise tool.

Voice Control Scope (Mandarin): This is a Keyword Spotting application built with the NuMicro® M467 and the NuEdgeWise tool.

Warehouse Management System (Mandarin): This is an Object Classification application built with the NuMicro® MA35D1 and the NuEdgeWise tool.

This is a Face Detection and Number Counting application built with the NuMicro® MA35D1.

Auto Plate Billing (Mandarin): This is an Object Detection application built with the NuMicro® MA35D1 and the NuEdgeWise tool.

Nuvoton Endpoint AI Introduction

Nuvoton Endpoint AI Platform Features

Nuvoton Machine Learning Ecosystem

NuEdgeWise Tool Helps Users Train Models

Machine Learning Application Design for Users' Reference

NuEdgeWise: Machine Learning Development Environment for Nuvoton AI

NuEdgeWise, an IDE from Nuvoton Technology, is a machine learning tool designed specifically for TinyML development. It supports the four main stages of machine learning application development: labeling, training, validation, and testing. Built on the Jupyter Notebook platform, NuEdgeWise lets developers train models and deploy them to Nuvoton's microcontrollers and microprocessors using TensorFlow Lite, making TinyML applications more accessible and easier to implement.

Development process:

Step I: Labeling

Data is labeled or categorized during this stage, which is crucial for training machine learning models. For instance, image data might be tagged as containing specific objects or scenes, audio data could be labeled by the type of sound, and sensor data might be labeled according to what its raw readings represent. This process usually requires manual effort to ensure the accuracy and consistency of the labels.

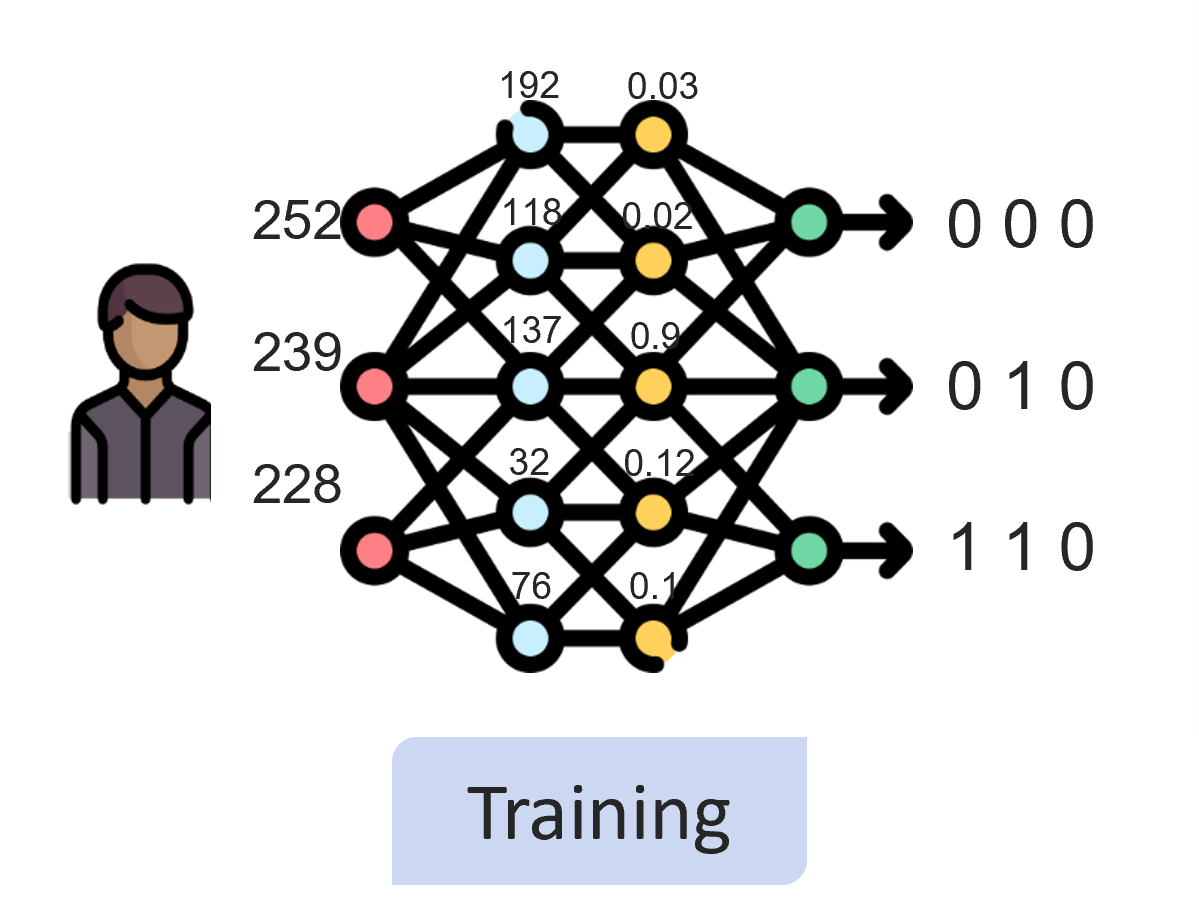

Step II: Training

Machine learning models are trained on the labeled data. For this step, Nuvoton also provides pre-defined models so that users can train their own models quickly. The trained models can then make predictions or classifications on unseen data. Training typically requires a substantial amount of data and computational resources.

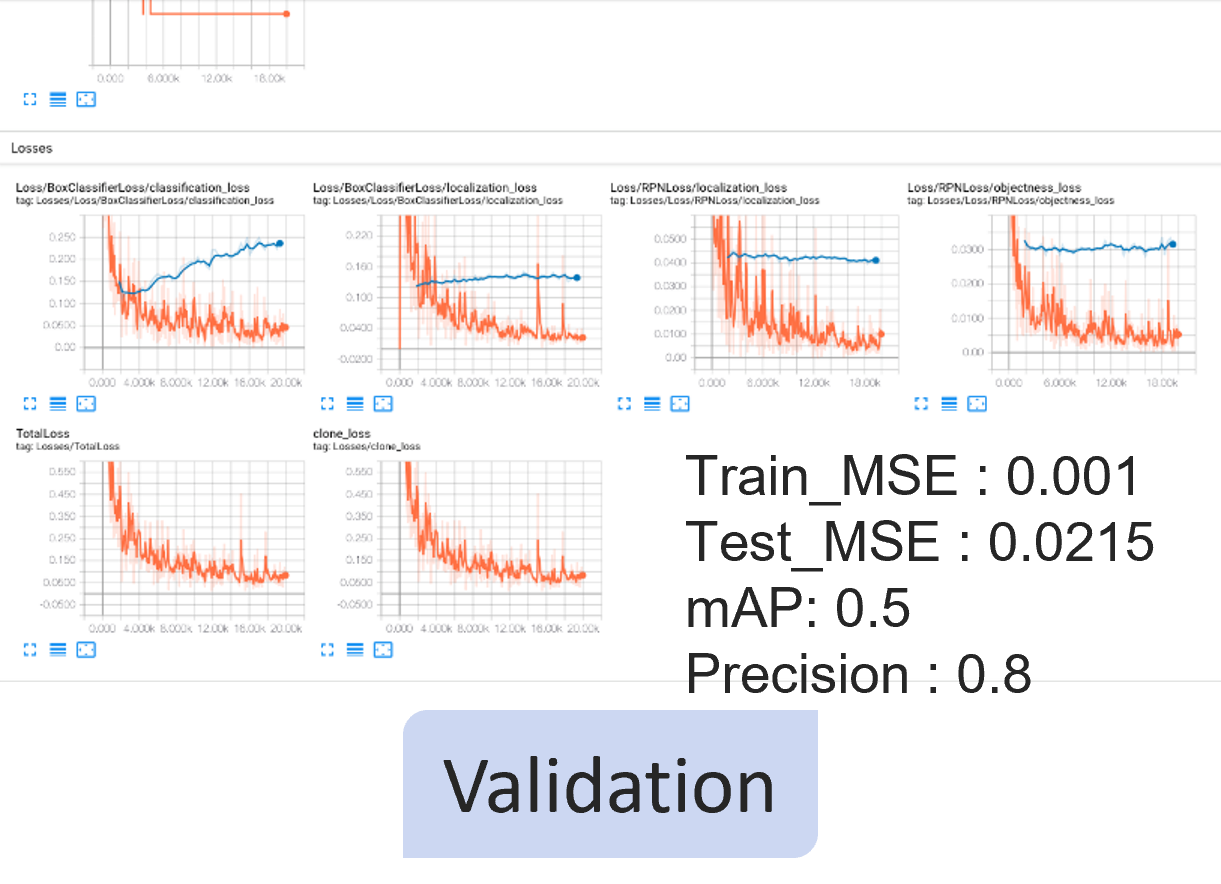

Step III: Validation

The validation stage assesses the model's performance on data that was not involved in the training process, which helps determine the model's accuracy and ability to generalize. Typically, a portion of the data is held out from training and reserved for this evaluation.

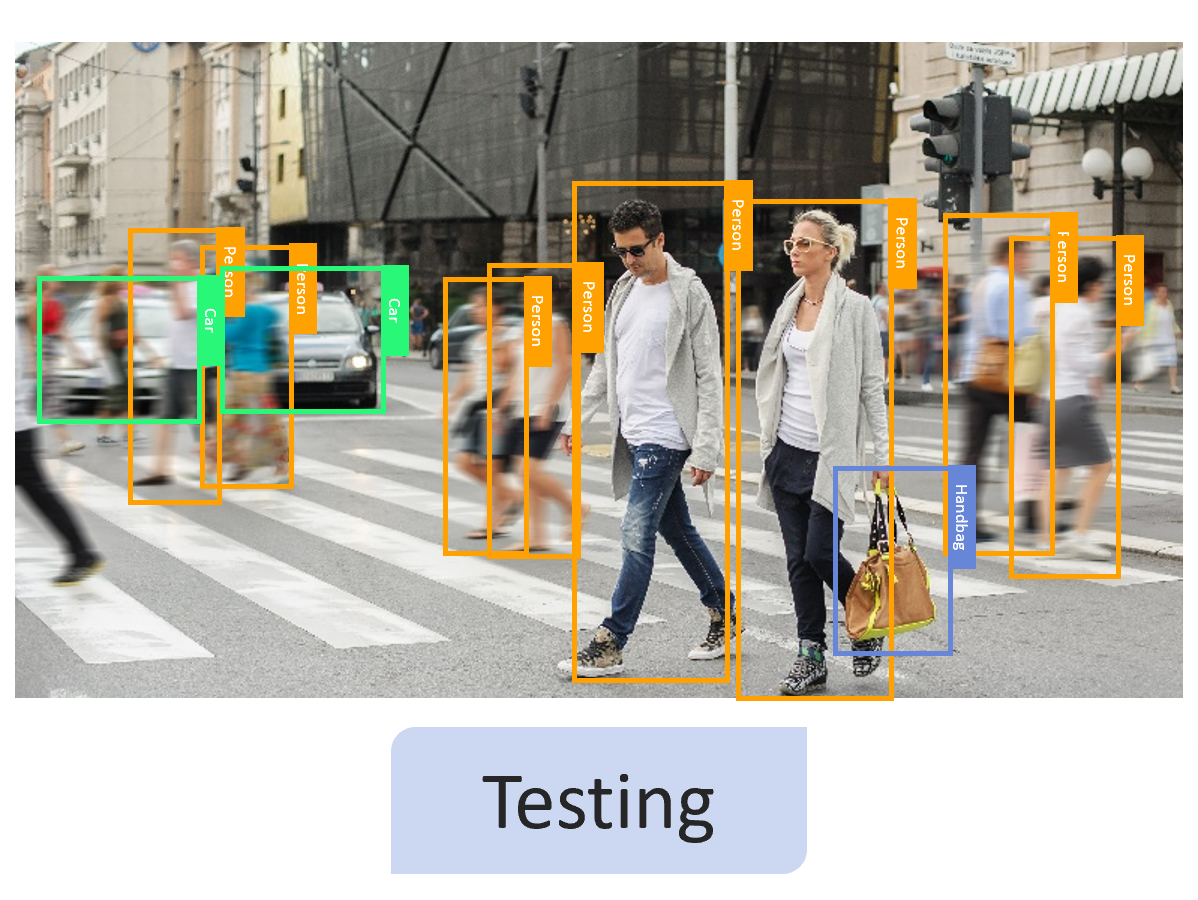

Step IV: Testing

The final testing phase further evaluates the model's performance on a completely independent dataset. This crucial step checks whether the model has learned the underlying features of the data rather than merely memorizing the training set. Successful testing indicates that the model is ready for real-world use.
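Steps III and IV both rely on data held out from training. As a minimal sketch in plain Python, the data can be shuffled once and divided into training, validation, and test sets, and each held-out set scored separately. The 70/15/15 split, the `split_dataset` and `accuracy` names, and the toy majority-class "model" are illustrative assumptions, not NuEdgeWise code.

```python
import random
from collections import Counter


def split_dataset(samples, train_frac=0.7, val_frac=0.15, seed=0):
    """Shuffle labeled samples and split them into train, validation, and test sets.

    The test set receives whatever remains after the training and
    validation portions, so it never overlaps with either of them.
    """
    items = list(samples)
    random.Random(seed).shuffle(items)  # fixed seed makes the split reproducible
    n_train = int(len(items) * train_frac)
    n_val = int(len(items) * val_frac)
    train = items[:n_train]
    val = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]
    return train, val, test


def accuracy(predict, dataset):
    """Fraction of (features, label) pairs that `predict` labels correctly."""
    if not dataset:
        return 0.0
    correct = sum(1 for features, label in dataset if predict(features) == label)
    return correct / len(dataset)


if __name__ == "__main__":
    # Hypothetical labeled data: (feature, label) pairs.
    data = [(i, "even" if i % 2 == 0 else "odd") for i in range(100)]
    train, val, test = split_dataset(data)

    # Toy model: always predicts the most frequent label in the training set.
    majority_label = Counter(label for _, label in train).most_common(1)[0][0]

    def predict(features):
        return majority_label

    print(len(train), len(val), len(test))  # 70 15 15
    print(f"validation accuracy: {accuracy(predict, val):.2f}")
    print(f"test accuracy:       {accuracy(predict, test):.2f}")
```

Because the test set is only touched once at the end, a large gap between validation and test accuracy is a rough sign that tuning decisions overfit the validation set.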

AI Applications

Nuvoton Technology offers seven development scenarios that make it easy to take the first step in AI application development:

Each application below lists its model and its support on the M467, MA35D1, and M55M1 development tools (✓ = supported, ✗ = not supported).

Keyword Spotting

Model: DNN/DS-CNN; M467 ✓, MA35D1 ✗, M55M1 ✓

This application focuses on Keyword Spotting (KWS), exploring how to collect specific sound data and use it to train a Depthwise Separable Convolutional Neural Network (DS-CNN) or a traditional Deep Neural Network (DNN). It demonstrates the entire process, from data collection, preprocessing, and feature extraction to model training. The goal is a model that accurately detects and responds to specific voice commands, suitable for voice interaction applications such as smart assistants and voice control systems that enhance the user experience.
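Feature extraction for keyword spotting usually begins by slicing the audio into short, overlapping frames, from which features such as MFCCs are then computed. The sketch below covers only the framing step and assumes 16 kHz audio with 40 ms frames and a 20 ms stride; the sampling rate, frame sizes, and the `frame_signal` name are illustrative assumptions rather than NuEdgeWise settings.

```python
def frame_signal(samples, frame_len, stride):
    """Split a 1-D sequence of audio samples into overlapping frames.

    Trailing samples that do not fill a complete frame are dropped.
    """
    if frame_len <= 0 or stride <= 0:
        raise ValueError("frame_len and stride must be positive")
    frames = []
    for start in range(0, len(samples) - frame_len + 1, stride):
        frames.append(samples[start:start + frame_len])
    return frames


if __name__ == "__main__":
    sample_rate = 16_000                  # assumed sampling rate in Hz
    frame_len = sample_rate * 40 // 1000  # 40 ms -> 640 samples (assumed)
    stride = sample_rate * 20 // 1000     # 20 ms -> 320 samples (assumed)
    one_second = [0.0] * sample_rate      # placeholder for 1 s of audio
    frames = frame_signal(one_second, frame_len, stride)
    print(len(frames), len(frames[0]))    # 49 640
```

With these assumed values, one second of audio yields 49 frames; a feature extractor would then turn each frame into one row of the DS-CNN or DNN input.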

Gesture Recognition

Model: CNN; M467 ✓, MA35D1 ✗, M55M1 ✓

Gesture motion data is collected with a three-axis accelerometer and used to train a Convolutional Neural Network (CNN) to recognize different gestures. The model is then deployed onto a development board for real-time gesture recognition.
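Before a CNN can classify gestures, the continuous three-axis accelerometer stream is typically cut into fixed-length windows. The sketch below mean-centers each axis within each window to remove constant offsets such as the gravity component. The window length, step, normalization choice, and the `make_windows` name are hypothetical; Nuvoton's gesture example may preprocess its data differently.

```python
from statistics import fmean


def make_windows(stream, window_len, step):
    """Cut a stream of (x, y, z) accelerometer samples into fixed-length windows.

    Each window is mean-centered per axis, which removes constant offsets
    such as gravity. Incomplete trailing windows are dropped.
    """
    windows = []
    for start in range(0, len(stream) - window_len + 1, step):
        window = stream[start:start + window_len]
        # Per-axis mean of this window (axis 0 = x, 1 = y, 2 = z).
        means = [fmean(sample[axis] for sample in window) for axis in range(3)]
        windows.append([
            tuple(sample[axis] - means[axis] for axis in range(3))
            for sample in window
        ])
    return windows


if __name__ == "__main__":
    # Hypothetical readings in g: device lying flat (z = 1 g) while moving along x.
    stream = [(0.0, 0.0, 1.0), (0.5, 0.0, 1.0), (1.0, 0.0, 1.0),
              (0.5, 0.0, 1.0), (0.0, 0.0, 1.0), (-0.5, 0.0, 1.0)]
    for window in make_windows(stream, window_len=4, step=2):
        print(window)
```

Each resulting window has shape (window_len, 3), which matches the kind of fixed-size input a 1-D or 2-D CNN expects.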

Image Classification

Model: MobileNet; M467 ✗, MA35D1 ✓, M55M1 ✓

This application explores methods and practices for image classification with the MobileNet model. As a lightweight deep learning architecture, MobileNet is particularly suitable for devices with limited computational resources. The application starts with the model's basic structure, explaining how MobileNet uses depthwise separable convolutions to reduce computational load and model size while maintaining efficient classification performance. It then introduces how to train MobileNet on public datasets, including data preprocessing, model training, and performance optimization, and explores how to deploy the trained model on different platforms, including cloud services and edge computing devices. Finally, practical cases such as object recognition and scene understanding demonstrate MobileNet's value in real-time, high-efficiency image processing. The application provides practical guidance and inspiration for readers who want to apply deep learning to image classification.
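The saving from depthwise separable convolutions can be checked with simple arithmetic. Using the cost model from the MobileNet paper, for a D_F × D_F output feature map with M input channels, N output channels, and a D_K × D_K kernel, a standard convolution needs D_K·D_K·M·N·D_F·D_F multiply-accumulates (MACs), while a depthwise convolution followed by a 1×1 pointwise convolution needs D_K·D_K·M·D_F·D_F + M·N·D_F·D_F. The layer sizes in the example are illustrative assumptions.

```python
def standard_conv_macs(dk, m, n, df):
    """MACs for a standard dk x dk convolution producing a df x df x n output."""
    return dk * dk * m * n * df * df


def separable_conv_macs(dk, m, n, df):
    """MACs for a depthwise dk x dk convolution followed by a 1x1 pointwise convolution."""
    depthwise = dk * dk * m * df * df  # one dk x dk filter per input channel
    pointwise = m * n * df * df        # 1x1 convolution mixes m channels into n
    return depthwise + pointwise


if __name__ == "__main__":
    # Illustrative layer: 3x3 kernel, 32 -> 64 channels, 56x56 output feature map.
    dk, m, n, df = 3, 32, 64, 56
    std = standard_conv_macs(dk, m, n, df)
    sep = separable_conv_macs(dk, m, n, df)
    print(f"standard:  {std:,} MACs")
    print(f"separable: {sep:,} MACs")
    print(f"ratio:     {sep / std:.3f} (theory: 1/N + 1/Dk^2 = {1 / n + 1 / dk**2:.3f})")
```

The ratio reduces to 1/N + 1/D_K², so for a 3×3 kernel the separable layer costs roughly an eighth to a ninth of the standard one. Dropping the D_F·D_F factor gives the weight counts, which shrink by the same ratio; this is where the smaller model size comes from.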

Object Detection

Model: SSD_MobileNet_; M467 ✗, MA35D1 ✓, M55M1 ✗

In this application, we explore how to use the TensorFlow Object Detection API to detect objects in images. This API enables developers to train and deploy object detection models for applications ranging from security surveillance to autonomous vehicles. The application introduces the API basics, including setting up the development environment, preparing and annotating datasets, and training and evaluating models. It also discusses how to apply trained models to real-time detection and deploy them on various platforms and devices, providing a comprehensive guide and practical insights for developers who want to delve deeper into this field.
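Training and evaluating detectors such as SSD relies on intersection over union (IoU) to match predicted boxes with ground-truth boxes. The following is a minimal, framework-independent sketch, assuming boxes given as (x_min, y_min, x_max, y_max) in pixels; it is not the TensorFlow Object Detection API's own implementation.

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes (x_min, y_min, x_max, y_max)."""
    ax0, ay0, ax1, ay1 = box_a
    bx0, by0, bx1, by1 = box_b
    # Overlap rectangle; width and height clamp to zero when the boxes do not intersect.
    inter_w = max(0.0, min(ax1, bx1) - max(ax0, bx0))
    inter_h = max(0.0, min(ay1, by1) - max(ay0, by0))
    inter = inter_w * inter_h
    area_a = (ax1 - ax0) * (ay1 - ay0)
    area_b = (bx1 - bx0) * (by1 - by0)
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


if __name__ == "__main__":
    ground_truth = (10, 10, 50, 50)
    prediction = (30, 30, 70, 70)
    print(f"IoU = {iou(ground_truth, prediction):.3f}")  # 400 / 2800 -> 0.143
```

A prediction is commonly counted as a correct detection when its IoU with a ground-truth box of the same class is at least 0.5, although the threshold used in a given evaluation may differ.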

Object Detection

Model: Yolo-fastest v1; M467 ✗, MA35D1 ✗, M55M1 ✓

This application explains in depth how to use the Darknet framework to train a YOLO (You Only Look Once) model suited to edge computing. It focuses on converting the trained model into TensorFlow Lite format and improving its performance with the Vela optimization tool. The application covers the key steps of model training, the conversion process, and optimization techniques, helping developers detect various types of objects effectively on resource-limited edge computing devices.
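Vela operates on quantized TensorFlow Lite models (typically 8-bit integer), so the conversion step usually includes full-integer quantization. TensorFlow Lite's 8-bit scheme represents a real value as real = scale × (q - zero_point). The sketch below applies that formula directly, choosing the scale and zero point from an assumed calibration range. It illustrates only the arithmetic; it is not the TensorFlow Lite converter, and the function names are hypothetical.

```python
def quant_params(min_val, max_val, qmin=-128, qmax=127):
    """Choose an asymmetric scale and zero point that map [min_val, max_val] onto int8."""
    # The range must include 0.0 so that zero is exactly representable.
    min_val = min(min_val, 0.0)
    max_val = max(max_val, 0.0)
    scale = (max_val - min_val) / (qmax - qmin)
    if scale == 0.0:
        return 1.0, 0
    zero_point = round(qmin - min_val / scale)
    zero_point = max(qmin, min(qmax, zero_point))
    return scale, zero_point


def quantize(x, scale, zero_point, qmin=-128, qmax=127):
    """real -> int8: q = clamp(round(x / scale) + zero_point).

    Python's round() uses round-half-to-even; a real converter's rounding mode may differ.
    """
    return max(qmin, min(qmax, round(x / scale) + zero_point))


def dequantize(q, scale, zero_point):
    """int8 -> real: x = scale * (q - zero_point)."""
    return scale * (q - zero_point)


if __name__ == "__main__":
    # Assumed activation range observed on calibration data.
    scale, zp = quant_params(-1.0, 3.0)
    for x in (-1.0, 0.0, 0.5, 3.0):
        q = quantize(x, scale, zp)
        print(f"{x:5.2f} -> q={q:4d} -> {dequantize(q, scale, zp):.4f}")
```

The round trip loses at most half a quantization step (scale / 2) for values inside the calibrated range, while values outside it are clamped to the int8 limits.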

Anomaly Detection

Model: DNN/; M467 ✓, MA35D1 ✗, M55M1 ✓

This application details how to apply anomaly detection techniques to train TinyML models and convert them into TensorFlow Lite (TFLite) format. It covers everything from data preprocessing and feature extraction to the anomaly detection algorithms themselves, as well as how to optimize the trained models and convert them to TFLite for operation on resource-constrained devices. This approach suits applications that require efficient, real-time anomaly detection, such as industrial monitoring and IoT (Internet of Things) devices.
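A common way to turn a model's output into an anomaly decision is to score each input (for example, by an autoencoder's reconstruction error) and flag scores above a threshold derived from normal data only. The sketch below assumes a threshold of mean + k × standard deviation of the scores on normal data, with k = 3; the scoring method, k, and the function names are illustrative assumptions, not details of Nuvoton's application.

```python
from statistics import mean, stdev


def fit_threshold(normal_scores, k=3.0):
    """Threshold = mean + k * sample standard deviation of scores on normal data."""
    return mean(normal_scores) + k * stdev(normal_scores)


def is_anomaly(score, threshold):
    """Flag an input whose anomaly score exceeds the fitted threshold."""
    return score > threshold


if __name__ == "__main__":
    # Hypothetical reconstruction errors measured on normal (non-anomalous) samples.
    normal_scores = [0.010, 0.012, 0.011, 0.009, 0.013, 0.010, 0.011]
    threshold = fit_threshold(normal_scores)
    for score in (0.012, 0.050):
        print(f"score={score:.3f} anomaly={is_anomaly(score, threshold)}")
```

Because the threshold is fitted only on normal data, no labeled anomalies are needed for training, which suits industrial settings where faults are rare.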

Visual Wake Words

Model: Small MobileNet RGB/gray; M467 ✓, MA35D1 ✗, M55M1 ✓

This application explores implementing Visual Wake Words (VWW) models on microcontrollers, focusing on detecting the presence of people or other specific objects in images. It uses lightweight deep learning models designed to run on resource-constrained microcontrollers. The application introduces how to train and optimize such models effectively and covers the process of deploying them on microcontrollers, providing a practical, efficient solution for developing low-power, real-time, responsive embedded vision applications.
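The Visual Wake Words model is listed in both RGB and grayscale variants. A grayscale input can be produced from an RGB camera frame with the ITU-R BT.601 luma weights (Y = 0.299 R + 0.587 G + 0.114 B). The sketch below assumes 8-bit RGB pixels stored as a flat list of tuples; the `rgb_to_gray` name and data layout are illustrative assumptions and are not taken from Nuvoton's sample code.

```python
def rgb_to_gray(pixels):
    """Convert 8-bit (R, G, B) tuples to 8-bit luma using ITU-R BT.601 weights."""
    gray = []
    for r, g, b in pixels:
        y = 0.299 * r + 0.587 * g + 0.114 * b
        gray.append(min(255, max(0, round(y))))  # round and clamp to the 0..255 range
    return gray


if __name__ == "__main__":
    # Hypothetical 2x2 image in row-major order: red, green, blue, white.
    image = [(255, 0, 0), (0, 255, 0), (0, 0, 255), (255, 255, 255)]
    print(rgb_to_gray(image))  # [76, 150, 29, 255]
```

A grayscale model takes one channel instead of three, which cuts the input tensor to a third of its RGB size and lowers the cost of the first convolution layer.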

Articles

| Type | Title |
|---|---|
| Tech Blog | 【Endpoint AI】Implementing Pose Landmark Detection using the NuMicro® M55M1 ML MCU |
| Tech Blog | 【Endpoint AI】Utilizing the NuMicro® M55M1 ML MCU for Facial Landmark Detection Applications |
| Tech Blog | 【Endpoint AI】NuEzAI-M55M1 Development Board: A Powerful Tool for Simplifying AI Development |
| Tech Blog | 【Endpoint AI】Nuvoton Gesture Recognition: A High-Performance Application For MCU AI |
| Press Release | 2024-01-05 Nuvoton Unveils New Production-Ready Endpoint AI Platform for Machine Learning |
| Press Release | 2023-06-27 Nuvoton and Skymizer Achieve MLPerf Tiny Benchmark Leadership Using NuMaker-M467HJ |